Dealing With Data

16 January 2014

In the first run of the Large Hadron Collider, almost a billion proton–proton collisions took place every second in the centre of the ATLAS detector. That amounts to enough data to fill 100,000 CDs each second. If you stacked those CDs on top of each other, in a year the stack would reach the moon four times. Only a small fraction of the observed proton–proton collisions have interesting characteristics that might lead to discoveries.

How does ATLAS deal with this mountain of data? How will it deal with the higher collision rates – resulting in almost twice as much data – planned for the next run in 2015?

Before being sent to storage, the collision events with potentially interesting physics must be selected. This selection process is carried out by the ATLAS Trigger system.

"The Higgs boson discovery has changed the landscape and the focus is now on measuring its properties," says David Francis, Trigger and Data Acquisition System project leader. "ATLAS has defined what it wants to analyse as the highest priority and our improved trigger system will be optimised to select these events as much as possible."

Until now, ATLAS' Trigger system consisted of three levels: Level-1, where decisions were made by specialized electronics within 2.5 microseconds after a collision occurred; Level-2, where specific regions of the events identified by Level-1 were analysed; and Event Filter, where entire events were analysed in full detail.

Only a few thousand events per second made it from Level-2 to Event Filter in the first run. The Event Filter reduced that number further, to about 400 potentially interesting physics events per second. This raw data was then sent to a storage system.

The analysis of the stored data continues while the Trigger team prepares for the next run. Detector electronics are being upgraded to allow an increase in the Level-1 acceptance rate of events from 70 kHz to 100 kHz. To handle the new acceptance rate, the size of computer farms will also have to be increased.
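To get a feel for how steeply each trigger level cuts the event rate, the first-run figures quoted above can be put into a short calculation. This is only a rough sketch: the article gives "almost a billion" collisions and "a few thousand" events per second out of Level-2, so the round values `1e9` and `5e3` used here are assumptions chosen for illustration, and the function name `rejection_factors` is hypothetical.

```python
# Approximate first-run rates, in events per second.
COLLISION_RATE = 1e9      # "almost a billion" collisions per second (rounded up)
LEVEL1_RATE = 70e3        # Level-1 acceptance rate of 70 kHz
LEVEL2_RATE = 5e3         # ASSUMPTION: stand-in for "a few thousand" per second
EVENT_FILTER_RATE = 400   # about 400 events per second sent to storage

STAGES = [
    ("Collisions", COLLISION_RATE),
    ("Level-1", LEVEL1_RATE),
    ("Level-2", LEVEL2_RATE),
    ("Event Filter", EVENT_FILTER_RATE),
]


def rejection_factors(stages):
    """Return (name, output_rate, factor) for each trigger level.

    factor is the input rate divided by the output rate, i.e. roughly
    "one event kept out of every `factor` events seen".
    """
    results = []
    previous_rate = stages[0][1]
    for name, rate in stages[1:]:
        results.append((name, rate, previous_rate / rate))
        previous_rate = rate
    return results


if __name__ == "__main__":
    for name, rate, factor in rejection_factors(STAGES):
        print(f"{name:>12}: {rate:>10,.0f} events/s, keeps about 1 in {factor:,.0f}")
    overall = COLLISION_RATE / EVENT_FILTER_RATE
    print(f"Overall: about 1 event stored per {overall:,.0f} collisions")
```

With these round numbers, almost all of the reduction happens in Level-1, which is why raising its acceptance rate from 70 kHz to 100 kHz puts more load on the computer farms downstream.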

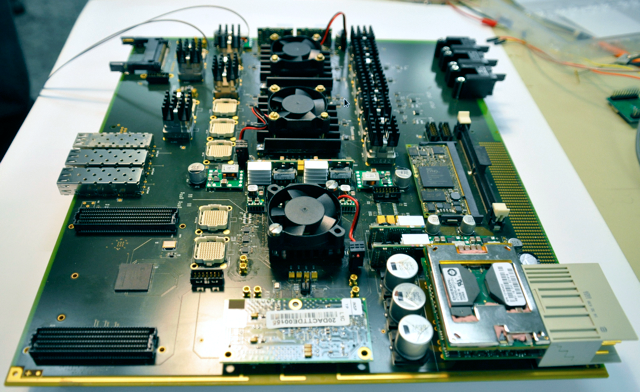

Plans to make the system more efficient include merging Level-2 and Event Filter, together with the introduction of a hardware-based topological trigger in Level-1, which raises the selectivity of events at the earliest stage. This hardware is an electronics board, developed especially for ATLAS, and is an example of how trigger hardware is evolving to meet new challenges.

The electronics board combines existing information using new criteria that select collision events on the basis of their angular and other correlations. The board's selection capability will be very important in identifying potentially interesting physics in the increased amount of data. For instance, analyses such as the ATLAS result on the Higgs boson decaying into two tau leptons, announced last year, could profit from this.
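As a loose illustration of what selecting on an angular correlation can mean, the sketch below keeps a pair of trigger objects only if they are close together in the detector's angular coordinates. It is not the logic of the ATLAS board, which is dedicated hardware. The `TriggerObject` type, the function names and the `max_delta_r` threshold are all hypothetical, chosen only to show the idea in software.

```python
import math
from typing import NamedTuple


class TriggerObject(NamedTuple):
    """Hypothetical trigger object described only by its direction."""
    eta: float  # pseudorapidity
    phi: float  # azimuthal angle in radians


def delta_phi(phi1: float, phi2: float) -> float:
    """Azimuthal separation, wrapped into the range [0, pi]."""
    dphi = abs(phi1 - phi2) % (2 * math.pi)
    return 2 * math.pi - dphi if dphi > math.pi else dphi


def delta_r(a: TriggerObject, b: TriggerObject) -> float:
    """Angular distance sqrt(d_eta^2 + d_phi^2) between two objects."""
    return math.hypot(a.eta - b.eta, delta_phi(a.phi, b.phi))


def passes_topological_cut(a: TriggerObject, b: TriggerObject,
                           max_delta_r: float = 2.8) -> bool:
    """Accept the pair if the objects are within max_delta_r of each other.

    ASSUMPTION: the 2.8 default is an arbitrary illustrative threshold,
    not a value used by ATLAS.
    """
    return delta_r(a, b) < max_delta_r


if __name__ == "__main__":
    close_pair = (TriggerObject(0.5, 3.0), TriggerObject(0.7, -3.0))
    far_pair = (TriggerObject(-2.0, 0.0), TriggerObject(2.0, 3.0))
    print("close pair accepted:", passes_topological_cut(*close_pair))
    print("far pair accepted:", passes_topological_cut(*far_pair))
```

The wrap-around in `delta_phi` matters because the azimuthal angle is periodic: two objects at 3.0 and -3.0 radians are neighbours, not opposite each other.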

"The Trigger team coordinates with different physics groups in the collaboration to optimize the selections made," says Brian Petersen, former Trigger coordinator and now a Supersymmetry sub-group convenor. "With more data for analysis, we have a better chance at finding the unknown."

This year, the new Trigger structure will be tested. If all goes well, when protons begin colliding again in the heart of the ATLAS detector, the trigger system will continue to select interesting data with high efficiency in an increasingly difficult environment.