Defending Your Life (Part 3)

28 October 2014

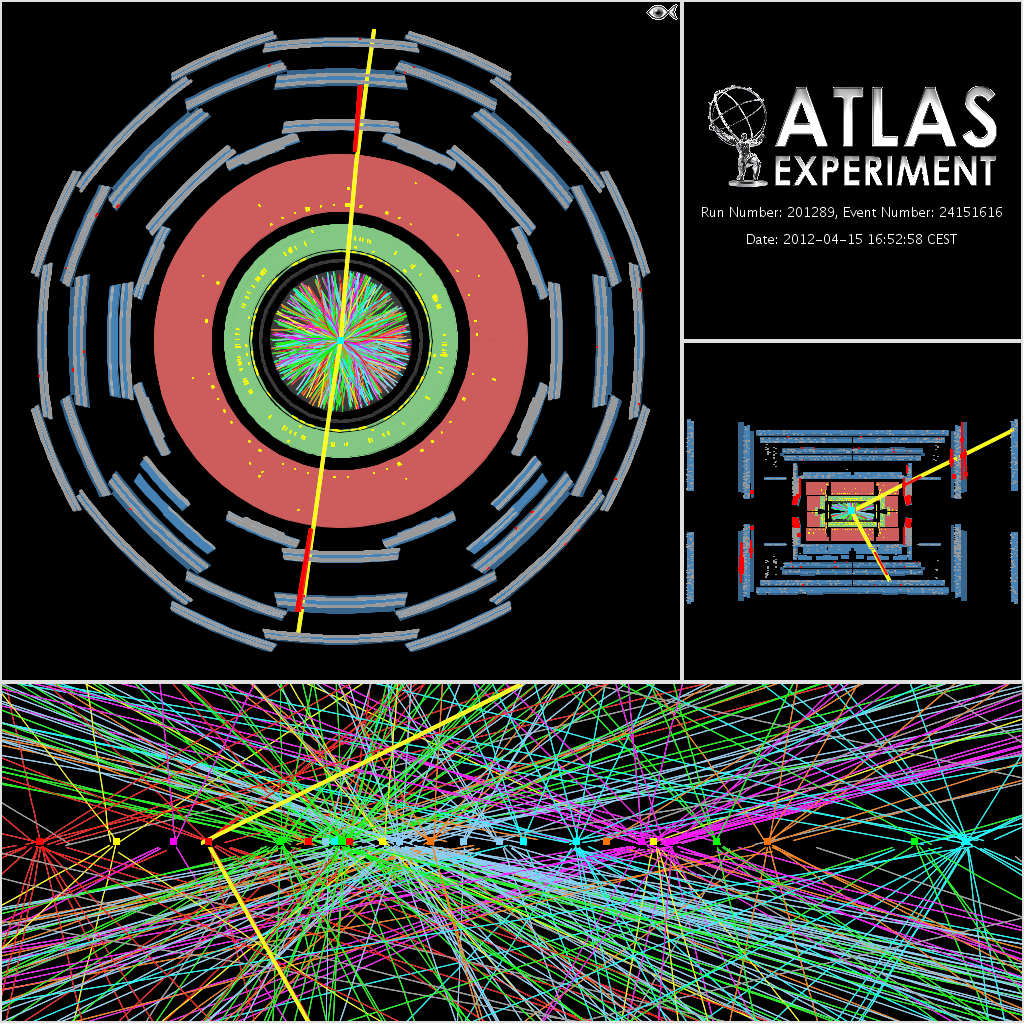

This is the last part of my attempt to explain our simulation software. You can read Part 1, about event generators, and Part 2, about detector simulation, if you want to catch up. Just as a reminder, we’re trying to help our theorist friend by searching for his proposed “meons” in our data. The detector simulation gives us a long list of energy deposits, times, and locations in our detector. The job isn’t done though. Now we have to take those energy deposits and turn them into something that looks like our data – which is pretty tricky! The code that does that is called “digitization”, and it has to be written specially for our detector (CMS has their own too).

The simple idea is to change the energies into whatever it is that the detector reads out – usually times, voltages, and currents, though it can be different for each type of detector. We have to build in all the detector effects that we care about. Some are well known, but not well understood (Birks’ law, for example). Some are a little complicated, like the change in light collected from a scintillator tile in the calorimeter depending on whether the energy is deposited right in the middle or on the edge. We can also use the digitization to model some of the very low-energy physics that we don’t want to have to simulate in detail with Geant4 but want to get right on average. Those are effects like the spread and collection of charge in a silicon module or the drift of ionized gas towards a wire at low voltage.
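To make the idea of digitization a bit more concrete, here is a minimal sketch of one scintillator cell being digitized. It applies Birks’ law, in which the light yield per unit length goes as (dE/dx) / (1 + kB · dE/dx), to each energy deposit and then converts the visible energy into ADC counts. Everything specific here is an assumption for illustration: the `Deposit` class, the value of `KB_MM_PER_MEV`, the gain `ADC_PER_MEV`, and the 12-bit `ADC_MAX` are placeholders, not what our real digitization code uses.

```python
from dataclasses import dataclass

# Illustrative placeholder constants (assumptions, not real detector values)
KB_MM_PER_MEV = 0.126   # Birks' constant for this toy scintillator
ADC_PER_MEV = 10.0      # hypothetical gain: ADC counts per MeV of visible energy
ADC_MAX = 4095          # hypothetical 12-bit readout saturates here


@dataclass
class Deposit:
    """One simulated energy deposit (hypothetical structure)."""
    energy_mev: float   # energy deposited in this step
    step_mm: float      # length of the step over which it was deposited


def visible_energy(dep: Deposit, kb: float = KB_MM_PER_MEV) -> float:
    """Apply Birks' law: dense ionization produces less light per MeV."""
    if dep.step_mm <= 0.0:
        return dep.energy_mev  # no step length, so no correction is possible
    de_dx = dep.energy_mev / dep.step_mm
    return dep.energy_mev / (1.0 + kb * de_dx)


def digitize_cell(deposits: list[Deposit]) -> int:
    """Sum the visible energy in one cell and convert it to ADC counts."""
    visible = sum(visible_energy(d) for d in deposits)
    counts = int(round(visible * ADC_PER_MEV))
    return min(counts, ADC_MAX)  # the readout cannot exceed its maximum


# A lightly ionizing and a heavily ionizing deposit in the same cell
cell = [Deposit(energy_mev=0.2, step_mm=1.0), Deposit(energy_mev=10.0, step_mm=1.0)]
print(digitize_cell(cell))
```

A real digitization also adds things like electronic noise, timing, and position-dependent light collection; this sketch leaves all of that out.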

Digitization is also where some other effects are put in, like “pile-up”, which is what we call the extra proton-proton collisions in a single bunch crossing. Those we usually pre-simulate and add on top of our important signal (meon) events, drawing them from a big library. We can add other background effects if we want to, like cosmic rays crossing the detector, or proton collisions with remnant gas particles floating around in the beampipe, or muons running down parallel to the beamline from protons that hit collimators upstream. Those sorts of things don’t happen every time protons collide, but we sometimes want to study how they look in the detector too.
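The pile-up overlay can be sketched in a few lines. The version below assumes each event is just a dictionary mapping a cell ID to deposited energy, and that the number of extra collisions per bunch crossing follows a Poisson distribution around some mean. The function names, the event format, and the toy library are all hypothetical; real overlay code deals with timing, many detector types, and much larger libraries.

```python
import math
import random
from collections import defaultdict


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Draw a Poisson-distributed integer (Knuth's method, fine for modest means)."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def overlay_pileup(signal_hits, minbias_library, mean_pileup, rng):
    """Add a random number of pre-simulated collisions on top of a signal event.

    signal_hits and each library entry map cell ID -> deposited energy.
    Returns the merged hits and the number of pile-up events that were added.
    """
    merged = defaultdict(float)
    for cell, energy in signal_hits.items():
        merged[cell] += energy

    n_pileup = sample_poisson(mean_pileup, rng)
    for _ in range(n_pileup):
        extra = rng.choice(minbias_library)  # pick one library event at random
        for cell, energy in extra.items():
            merged[cell] += energy
    return dict(merged), n_pileup


# Toy usage: one signal event and a tiny two-event library
rng = random.Random(2014)
signal = {7: 50.0}
library = [{0: 1.0, 3: 0.5}, {1: 2.0}]
hits, n_added = overlay_pileup(signal, library, mean_pileup=20.0, rng=rng)
print(n_added, len(hits))
```

Reusing library events like this is the whole point: the extra collisions are simulated once and then shared across many signal samples.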

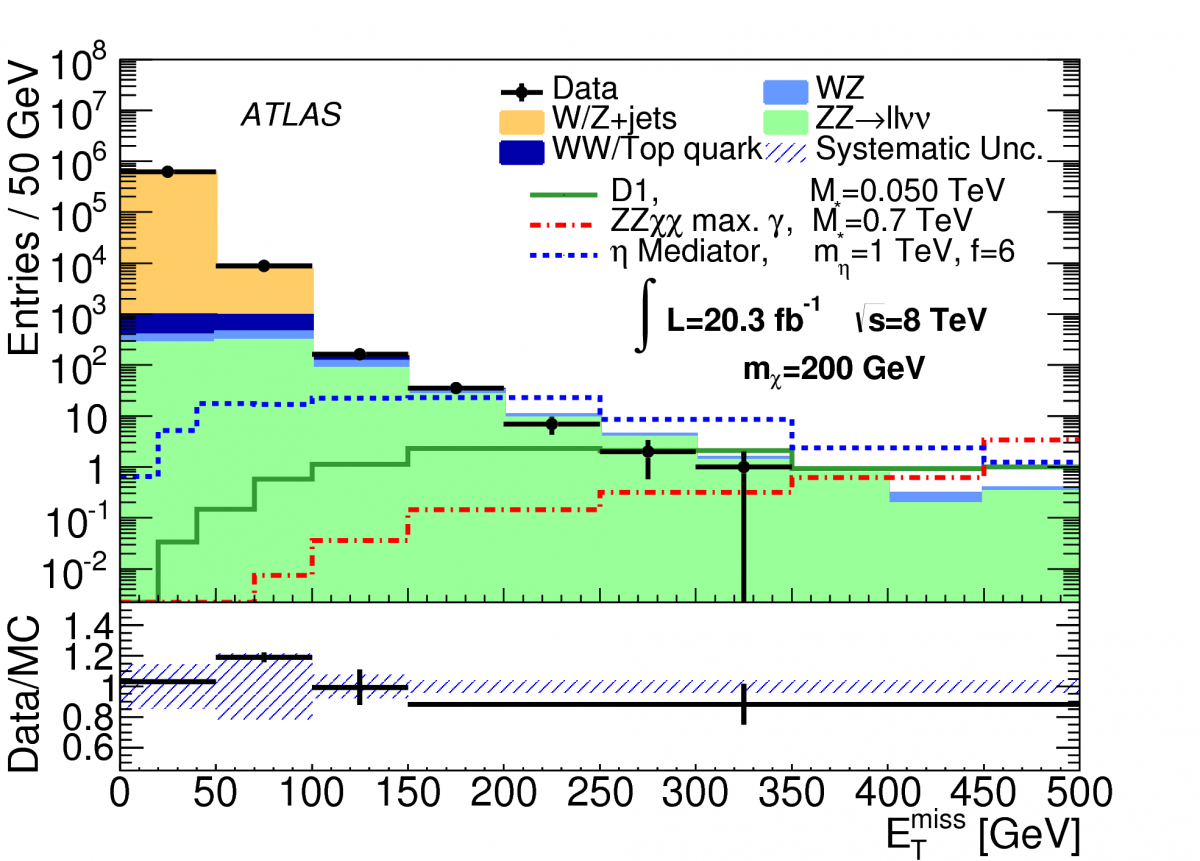

Now we should have something that looks a lot like our data – except we know exactly what it is, without any ambiguity! With that, we can try to figure out if our friend’s meons are a part of nature. We can build up some simulated data that includes all the different processes that we already know exist in nature, like the production of top quarks, W bosons, Z bosons, and our new Higgs bosons. And we can build another set that has all of those things, but also includes our friend’s meons. The last step, which is really what our data analysis is all about, is trying to figure out what makes events with meons special – different from the other ones we expect to see – and trying to isolate them from the others. We can look at the reconstructed energy in the event, the number of particles we find, any oddities like heavy particles decaying away from the collision point – anything that helps. And we have to know a little bit about the simulation, so that we don’t end up separating meons from other particles using properties of the events that are very hard to get right in the simulation. That selection work really is the first part of almost all our data analyses. And the last part of most of our analyses (we hope) is “unblinding”, where we finally check the data that has all the features we want – passes all our requirements – and see whether it looks more like nature with or without meons. Sometimes we try to use “data-driven methods” to estimate the backgrounds (or tweak the estimates from our simulation), but almost every time we start from the simulation itself.
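As a rough illustration of the selection step, here is a toy “cut and count” comparison of two simulated samples. It assumes each simulated event carries a weight that normalizes it to the amount of data we expect, and it uses two made-up requirements on reconstructed energy and particle count. The `Event` fields, the thresholds, and the numbers in the toy samples are all invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A reconstructed simulated event (hypothetical, heavily simplified)."""
    visible_energy_gev: float
    n_particles: int
    weight: float = 1.0  # normalizes the simulation to the expected data


def passes_selection(ev: Event, min_energy_gev: float = 500.0,
                     min_particles: int = 4) -> bool:
    """Made-up requirements meant to favor meon-like events."""
    return ev.visible_energy_gev > min_energy_gev and ev.n_particles >= min_particles


def expected_yield(events: list[Event]) -> float:
    """Weighted number of events that survive the selection."""
    return sum(ev.weight for ev in events if passes_selection(ev))


# Toy samples: known processes only, and the extra meon events
background = [Event(200.0, 3, 2.0), Event(800.0, 6, 0.5), Event(450.0, 5, 1.5)]
meons = [Event(900.0, 5, 0.1), Event(700.0, 7, 0.1), Event(300.0, 8, 0.1)]

print("background only:", expected_yield(background))
print("background + meons:", expected_yield(background) + expected_yield(meons))
```

Unblinding then amounts to counting the real data events that pass the same requirements and asking which of the two predictions they resemble.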

The usual thing that we find is that our friend told us about his theory, and we looked for it and didn’t find anything exciting. But by the time we get back, our theorist friends often say “well, I’ve been thinking more, and actually there is this thing that we could change in our model.” So they give us a new version of the meon theory, but this time instead of being just one model, it’s a whole set of models that could exist in nature, and we have to figure out whether any of them are right. We’re just going through this process for Supersymmetry, trying to think of thousands of different versions of Supersymmetry that we could look for and either find or exclude. Often, for that, you want something called a “fast simulation.”

To make a fast simulation, we either go top-down or bottom-up. The top-down approach means that we look at what the slowest part of our simulation is (always the calorimeters) and find ways to make it much, much faster, usually by parameterizing the response instead of using Geant4. The bottom-up approach means that we try to skip detector simulation and digitization altogether and go straight to the final things that we would have reconstructed (electrons, muons, jets, missing transverse momentum). There are even public fast simulations like DELPHES and the Pretty Good Simulation that theorists often use to try to find out what we’ll see when we simulate their models. Of course, the faster the simulation, normally, the fewer details and oddities can be included, and so the less well it models our data (“less well” doesn’t have to be “not good enough”, though!). We have a whole bunch of simulation types that we try to use for different purposes. The really fast simulations are great for quickly checking out how analyses might work, or for checking out what they might look like in future versions of our detector in five or ten years.
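The bottom-up approach can be sketched as a simple smearing function: take the true energy of a jet from the event generator and blur it with a Gaussian whose width follows a typical calorimeter resolution shape, a stochastic term that shrinks as 1/√E added in quadrature with a constant term. The parameter values below (`stochastic=0.5`, `constant=0.03`) are illustrative guesses, not the measured resolution of any real detector, and the function names are hypothetical.

```python
import math
import random


def relative_resolution(energy_gev: float, stochastic: float = 0.5,
                        constant: float = 0.03) -> float:
    """sigma/E = stochastic/sqrt(E) added in quadrature with a constant term."""
    return math.hypot(stochastic / math.sqrt(energy_gev), constant)


def smear_jet_energy(true_energy_gev: float, rng: random.Random) -> float:
    """Return a 'reconstructed' energy without simulating any detector."""
    sigma = true_energy_gev * relative_resolution(true_energy_gev)
    smeared = rng.gauss(true_energy_gev, sigma)
    return max(0.0, smeared)  # a reconstructed energy cannot be negative


# Smear a few generator-level jets
rng = random.Random(7)
for true_energy in (20.0, 100.0, 500.0):
    print(true_energy, round(smear_jet_energy(true_energy, rng), 1))
```

This runs in microseconds per jet instead of the minutes a full Geant4 simulation can take per event, which is exactly the trade: the average response and its spread are there, but none of the oddities are.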

So that’s just about it – why we really, really badly need the simulation, and how each part of it works. I hope you found it a helpful and interesting read! Or at least, I hope you’re convinced that the simulation is important to us here at the LHC.