ATLAS copies its first PetaByte out of CERN

1 November 2006

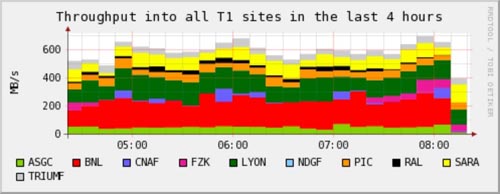

On 6th August ATLAS reached a major milestone for its Distributed Data Management project: copying its first PetaByte (10^15 bytes) of data out of CERN to computing centres around the world. This achievement is part of the so-called 'Tier-0 exercise', running since 19th June, in which simulated data is used to exercise the expected data flow within the CERN computing centre and out over the Grid to the Tier-1 computing centres, as would happen during real data taking. The expected rate of data output from CERN when the detector is running at full trigger rate is 780 MB/s, shared among 10 external Tier-1 sites(*), amounting to around 8 PetaBytes per year. The idea of the exercise was to try to reach this data rate and sustain it for as long as possible. The exercise was run as part of the LCG's Service Challenges and allowed ATLAS to successfully test the integration of ATLAS software with the LCG middleware services that are used for low-level cataloging and the actual data movement.
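
The figures quoted above can be cross-checked with a little arithmetic. The sketch below is only a back-of-the-envelope estimate, not an official ATLAS calculation. It assumes about 10^7 seconds of data taking per year (a value not given in the article), takes the year as 2006 from the dateline, and assumes the PetaByte was accumulated over the whole period from 19th June to 6th August. The function names are purely illustrative.

```python
from datetime import date

PB = 1e15  # bytes in a PetaByte, as defined in the article
MB = 1e6   # bytes in a megabyte (decimal convention)


def yearly_volume_pb(rate_mb_s, live_seconds):
    """Volume in PB exported in a year at a constant rate over the live time."""
    return rate_mb_s * MB * live_seconds / PB


def average_rate_mb_s(n_bytes, start, end):
    """Mean rate in MB/s needed to move n_bytes between two calendar dates."""
    seconds = (end - start).days * 86400
    return n_bytes / seconds / MB


# 780 MB/s is from the article; 1e7 live seconds per year is an ASSUMPTION.
volume = yearly_volume_pb(780, 1e7)

# Dates from the article; the year 2006 is inferred from the dateline.
avg = average_rate_mb_s(PB, date(2006, 6, 19), date(2006, 8, 6))

print(f"{volume:.1f} PB per year at nominal rate")
print(f"{avg:.0f} MB/s averaged over the exercise so far")
```

Under these assumptions the nominal rate corresponds to 7.8 PB per year, consistent with the "around 8 PetaBytes" quoted above, while the first PetaByte corresponds to an average of roughly 240 MB/s over the 48 days, about 30% of the nominal rate.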

When ATLAS is producing data, the Tier-0 centre located at CERN will be responsible for performing a first-pass reconstruction of the data arriving from the Event Filter farm, thus producing Event Summary Data (ESDs), Analysis Object Data (AODs) and event Tags. In the current exercise and in one carried out in January, we were able to operate a Tier-0 prototype successfully with increasing detail and realism, and to reach nominal internal dataflows and sustain them for about one week.

Once the ATLAS data has been processed at CERN, the original data and the reconstructed data products used for physics analysis must be shipped out around the world for consumption by physicists. The ATLAS distributed data management software, Don Quijote 2 (DQ2), runs at each of the 10 Tier-1 computing centres that accept data from CERN. When DQ2 finds a request to move data to the site it is responsible for, it transfers the data and catalogs it at the site using the underlying Grid middleware. From these large computing centres, DQ2 then moves some of the data to smaller regional ATLAS labs and institutes (Tier-2 centres) for analysis.
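
The per-site behaviour described above (look for requests addressed to this site, transfer the files, then catalog them locally) can be illustrated with a toy model. This is not DQ2's actual API or implementation: every class, method and dataset name below is hypothetical, and the `transfer` method is a stand-in for the real Grid middleware call.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TransferRequest:
    """Hypothetical request to place a dataset's files at a destination site."""
    dataset: str
    files: List[str]
    destination: str


@dataclass
class SiteAgent:
    """Hypothetical agent serving one site; keeps a local dataset -> files catalog."""
    site: str
    catalog: Dict[str, List[str]] = field(default_factory=dict)

    def transfer(self, filename: str) -> bool:
        # Stand-in for the Grid middleware file transfer; always succeeds here.
        return True

    def process(self, requests: List[TransferRequest]) -> List[Tuple[str, str]]:
        """Serve requests addressed to this site; return (dataset, file) failures."""
        errors = []
        for req in requests:
            if req.destination != self.site:
                continue  # another site's agent is responsible for this request
            for name in req.files:
                if self.transfer(name):
                    # Catalog a file only after its transfer has succeeded.
                    self.catalog.setdefault(req.dataset, []).append(name)
                else:
                    errors.append((req.dataset, name))
        return errors


requests = [
    TransferRequest("dataset_A", ["a1", "a2"], "RAL"),
    TransferRequest("dataset_B", ["b1"], "BNL"),
]
agent = SiteAgent("RAL")
errors = agent.process(requests)
```

In this toy run the RAL agent catalogs only the files of `dataset_A` and ignores the request addressed to BNL. Because each site handles only its own requests, the sites can work independently of one another.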

Since the start of the current Tier-0 exercise, the performance of DQ2 and the LCG middleware has been very satisfactory. The exercise has been used to gain valuable experience and to make several improvements to DQ2, and we are now at the stage where the system can run with almost no manual intervention. We also have extensive monitoring in place to report the state of transfers and any errors down to the individual file level.

(*) The 10 Tier-1 centres are: ASGC in Taiwan, BNL in the USA, CNAF in Italy, FZK in Germany, CC-IN2P3 in France, NDGF in Scandinavia, PIC in Spain, RAL in the UK, SARA in the Netherlands and TRIUMF in Canada.