Letters from the Road

18 October 2013 | By

I've been lucky enough to make two workshop and conference stops on a trip that started at the very beginning of October. The first was at Kinematic Variables for New Physics, hosted at Caltech. Now I'm up at the Computing in High Energy Physics conference in Amsterdam. Going to conferences and workshops is a big part of what we do: we go to explain our work to others and share the great things we're doing, to hear the latest on other people's work, and (this one is important) to talk with colleagues about what we should do next.

The first workshop, KVNP, was a small ATLAS-CMS-Theory discussion of what new variables we should use for our searches when the LHC restarts. A lot of our searches use pretty simple variables, like the amount of energy in the calorimeter and the momentum imbalance transverse to the beam. But you can also build some very clever variables that estimate more interesting quantities, like, under certain assumptions, the mass of a new particle you might have just created. That's a really interesting thing to be able to do, and if we discover something in 2015, estimating that sort of mass is going to be very important. For now, however, I left with the impression that we should start with simple things, try to understand very carefully what we're doing, and avoid adding much complexity to our searches. We'll have plenty of time for complexity later on!
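To make the "simple variable" concrete, here is a minimal sketch of the momentum imbalance transverse to the beam: minus the vector sum of the transverse momenta of everything visible in the event. As one illustration of a mass-estimating variable, it also computes the textbook transverse mass, which (assuming massless decay products and a single invisible particle) cannot exceed the parent particle's mass. The function names and the (px, py) input format are hypothetical choices for this example, not any experiment's actual software, and real reconstruction is far more involved.

```python
import math


def missing_pt(visible):
    """Momentum imbalance transverse to the beam.

    `visible` is a hypothetical list of (px, py) pairs in GeV, one per
    reconstructed object in a single event. The missing transverse
    momentum is minus their vector sum.
    """
    mpx = -sum(px for px, _ in visible)
    mpy = -sum(py for _, py in visible)
    return mpx, mpy


def transverse_mass(vis, met):
    """Transverse mass of one visible particle plus the missing momentum.

    Treats both as massless, so mT^2 = 2 * pT1 * pT2 * (1 - cos(dphi)),
    written here as 2 * (pT1 * pT2 - p1 . p2) to avoid computing angles.
    """
    pt1 = math.hypot(*vis)
    pt2 = math.hypot(*met)
    dot = vis[0] * met[0] + vis[1] * met[1]
    # Guard against tiny negative values from floating-point rounding.
    return math.sqrt(max(0.0, 2.0 * (pt1 * pt2 - dot)))


# Toy event: a single 40 GeV lepton balanced by an invisible particle.
lepton = (40.0, 0.0)
met = missing_pt([lepton])
print(met, transverse_mass(lepton, met))
```

With only one visible object, the missing momentum is simply its mirror image, and the back-to-back configuration gives the largest transverse mass possible for these momenta.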

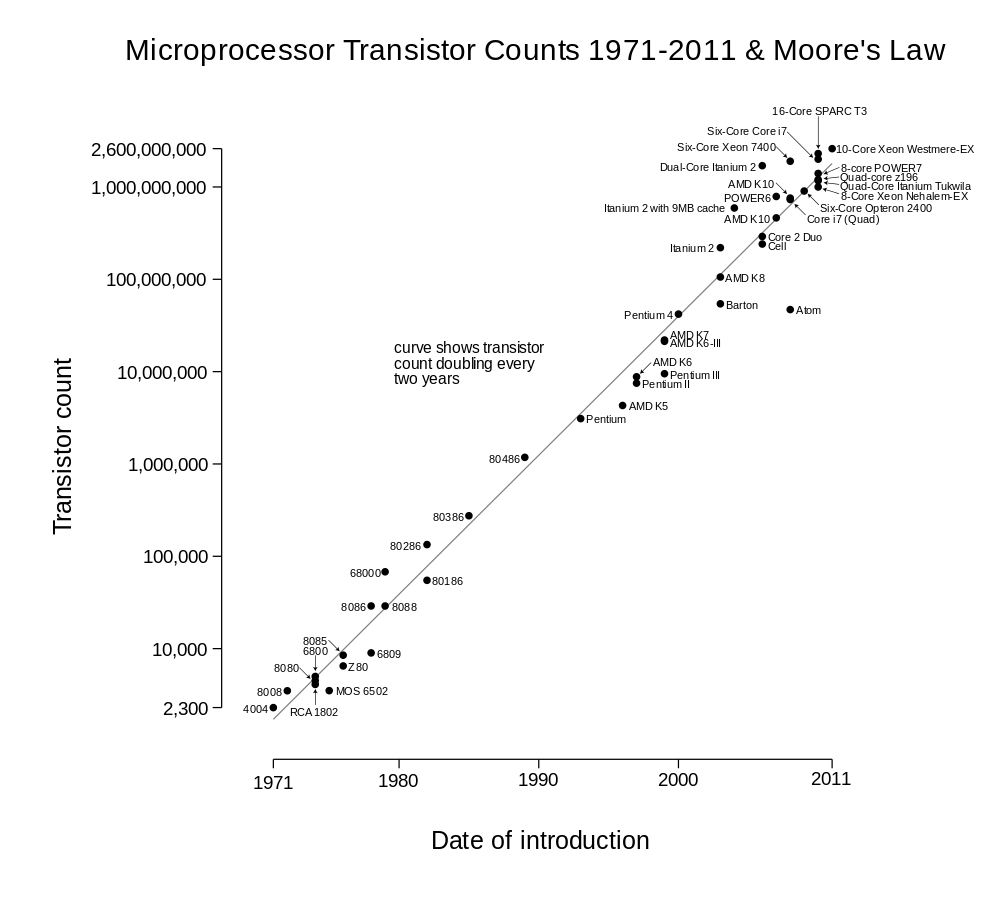

Now, up at CHEP, I'm listening to some interesting discussions about the future of computing in our field. Modern computing poses a lot of difficult problems, not least how our software should change now that Moore's law still holds (transistor counts keep growing) but processor clock speeds have capped out.

These talks really require a crystal ball: some technologies are soon going to be mainstream, and anyone betting on one runs the risk of ending up on the losing side, à la Betamax tapes, LaserDiscs, HD DVD, and others. Many of the people here don't think much about physics; I would be interested to see a poll of how many of them are actively working on a physics analysis, for example. But it's very interesting to hear what they have to say about computing issues, where they are far better equipped than the average physicist.

The conference is a bit strange: already two talks I wanted to hear have been cancelled, and one was given remotely over a video connection, thanks to the US government shutdown, which affected the US labs until Thursday. But it's been fun all the same. I'll leave you with a few discussion questions that I found really quite interesting this week:

- Can ATLAS or CMS run without a trigger by 2022? The data rate off the detector might be something we can handle (hint: the tracker seems to be the biggest problem!)

- How do you define "Big Data"? If you define it simply by data volume (in GB), then you're really just describing your budget!

- Are we heading for a world with custom code running on different system architectures, or will language and compiler development catch back up and restore homogeneity?